Web Scraping: The Biggest Data Source for Machine Learning

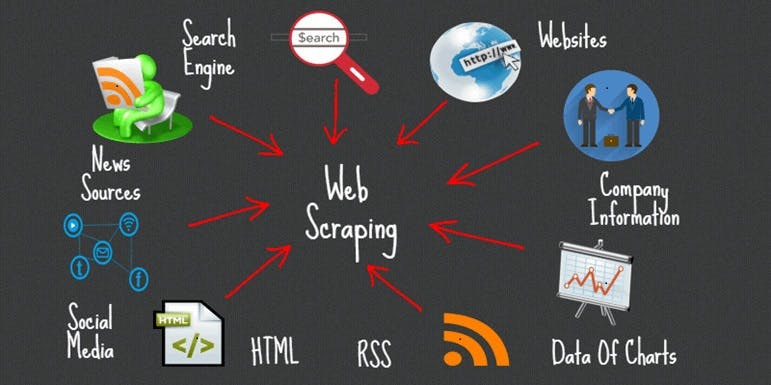

There is a huge amount of data on the web: terabytes are added every day, and older data continues to be updated regularly. Companies and research departments rely on data scraped from many different websites to build machine learning models that support accurate decisions. There is no doubt that the web is one of the best data sources for developing machine learning (ML) algorithms. However, a complex process lies between your machine learning algorithms and the data on the web. Today, we will discuss how to overcome this hurdle.

Scraping Web Data For Machine Learning

Before you start scraping data for machine learning, check the following points.

* Format of data

Most machine learning models expect data in a tabular or table-like format. Scraping unstructured data therefore means spending more time processing it before it can be used.
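To illustrate what turning scraped markup into table-like data can look like, here is a minimal sketch using only Python's standard-library `html.parser`. It assumes the page holds a simple `<table>` whose first row contains the column names; the `TableExtractor` class, the `html_table_to_records` helper and the sample HTML are hypothetical, not part of any particular scraping tool.

```python
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collects the text of <th>/<td> cells, one list per <tr> row (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows
        self._row = None    # row currently being built
        self._cell = None   # text fragments of the current cell

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None  # discard any cell left open by malformed HTML

    def handle_data(self, data):
        # Ignore whitespace between tags; keep only text inside a cell.
        if self._cell is not None:
            self._cell.append(data)


def html_table_to_records(html):
    """Use the first row as column names and return the remaining rows as dicts."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return []
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


sample = """
<table>
  <tr><th>product</th><th>price</th></tr>
  <tr><td>Laptop</td><td>799</td></tr>
  <tr><td>Phone</td><td>499</td></tr>
</table>
"""
print(html_table_to_records(sample))
# [{'product': 'Laptop', 'price': '799'}, {'product': 'Phone', 'price': '499'}]
```

Real pages are rarely this tidy, which is exactly why unstructured sources take longer to prepare: each new layout needs its own extraction logic before the rows look like a table.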

* List of Data

To meet the main objective of your machine learning project, list the data points you are aiming to scrape from the target websites or webpages. In practice, many of these data points may be missing from individual webpages, in which case you will need to scale down and keep only the data points that are the most prevalent. The reason is that training and testing your ML model on data with too many NA or empty values will reduce the model's performance and accuracy.
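One way to decide which data points are "the most prevalent" is to measure how often each field is actually filled in across the scraped pages and keep only the well-covered ones. The sketch below assumes each page has already been scraped into a dict; the 80% threshold (`min_coverage=0.8`) and the sample pages are hypothetical choices for illustration.

```python
from collections import Counter


def field_coverage(records):
    """Fraction of records in which each field is present and non-empty."""
    if not records:
        return {}
    counts = Counter()
    for record in records:
        for name, value in record.items():
            if value not in (None, ""):
                counts[name] += 1
    return {name: count / len(records) for name, count in counts.items()}


def select_fields(records, min_coverage=0.8):
    """Keep only the fields filled in on at least `min_coverage` of the pages."""
    coverage = field_coverage(records)
    kept = sorted(name for name, share in coverage.items() if share >= min_coverage)
    trimmed = [{name: record.get(name) for name in kept} for record in records]
    return trimmed, kept


pages = [
    {"title": "A", "price": "10", "rating": "4.5"},
    {"title": "B", "price": "12", "rating": ""},
    {"title": "C", "price": "9"},
    {"title": "D", "price": "15", "rating": None},
]
trimmed, kept = select_fields(pages)
print(kept)  # ['price', 'title']
```

Here `rating` appears on only one page in four, so it is dropped rather than fed to the model as mostly empty values.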

* Flag Issues

If you are creating a continuous data flow, the data will be scraped and updated in your system 24/7. Make sure you have a way to monitor when and where the scraping algorithm breaks so that you can act accordingly. Scrapers break for a number of reasons: for example, website owners change the UI, or a webpage has a different structure from the others on the same site. Any breaks must be fixed manually when they occur. Over time, your code will become more capable of handling different scenarios and the diverse websites that provide you with data.
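A simple way to notice breaks is to validate every scraped record against the fields you expect and raise an alert when too many records in a batch come back incomplete, which is a typical symptom of a layout change. This is a minimal sketch under assumptions: the `REQUIRED_FIELDS` schema, the 10% failure threshold and the example URLs are hypothetical, and in production the log messages would go to whatever alerting system you use.

```python
import logging

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("scraper-monitor")

REQUIRED_FIELDS = ("title", "price")  # hypothetical schema for this sketch


def check_batch(results, max_failure_rate=0.1):
    """Flag records missing required fields.

    `results` is a list of (url, record) pairs. Returns the URLs of broken
    records and whether the batch failure rate calls for a manual fix.
    """
    broken = []
    for url, record in results:
        missing = [name for name in REQUIRED_FIELDS if not record.get(name)]
        if missing:
            log.warning("%s: missing %s (layout change?)", url, ", ".join(missing))
            broken.append(url)
    rate = len(broken) / len(results) if results else 0.0
    needs_attention = rate > max_failure_rate
    if needs_attention:
        log.error("failure rate %.0f%% exceeds threshold; manual fix needed", rate * 100)
    return broken, needs_attention


batch = [
    ("https://example.com/p/1", {"title": "Laptop", "price": "799"}),
    ("https://example.com/p/2", {"title": "", "price": "499"}),
]
broken, alert = check_batch(batch)
```

Logging the URL alongside the missing fields tells you where the scraper broke, so the manual fix can target that page template.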

* Data Labelling

Data labeling can be one of the most challenging parts of preparing data for ML. However, if you gather the required metadata while scraping and store it as a separate data point, it will benefit the later stages of the data lifecycle.
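As an illustration, metadata such as the page's category, the source URL and the scrape time can be captured in separate fields next to the scraped text, so that some of it can later serve as a label. The `ScrapedItem` dataclass, the `labeled_examples` helper and the example URLs below are hypothetical; they sketch the idea rather than a prescribed format.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional, Tuple


@dataclass
class ScrapedItem:
    """A scraped data point with its metadata stored as separate fields."""
    text: str                       # content the model will learn from
    source_url: str                 # page the text was scraped from
    category: Optional[str] = None  # page metadata (e.g. a breadcrumb) usable as a label
    scraped_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def labeled_examples(items: List[ScrapedItem]) -> List[Tuple[str, str]]:
    """Pair each text with its category, skipping items that had no category."""
    return [(item.text, item.category) for item in items if item.category]


items = [
    ScrapedItem("Great battery life", "https://example.com/reviews/1", "electronics"),
    ScrapedItem("Fits true to size", "https://example.com/reviews/2", "clothing"),
    ScrapedItem("Arrived late", "https://example.com/reviews/3"),
]
print(labeled_examples(items))
# [('Great battery life', 'electronics'), ('Fits true to size', 'clothing')]
row = asdict(items[0])  # plain dict, ready to store alongside other records
```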

Cleaning, Preparing and Storing the Data

While this step may look simple, it is often one of the most complicated and time-consuming. This is because there is no one-size-fits-all method: the cleaning required varies with the kind of data you scraped and the websites you scraped it from, so you will need specific techniques for each case. As a first step, go through the data manually to identify any impurities it may contain. The easiest way to do this is with a Python library such as Pandas, which you can install on your computer. Once your analysis is complete, write a script that removes the imperfections from the data sources and normalizes the data points that are not in line with the others. After converting each field to a single data type, perform checks to ensure that the data has been transformed correctly. A column meant to store numbers cannot contain strings of characters, and a field meant to hold dates in dd/mm/yyyy format cannot store dates in any other format, such as mm/dd/yyyy. The next step is to identify and fix anything else that could interfere with processing the data, such as missing or null values.
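The checks described above can be scripted once you know what the impurities look like. Below is a minimal sketch in plain Python (a Pandas version would follow the same logic) that coerces a price column to numbers, normalizes dates to dd/mm/yyyy and drops rows that still have missing values; imputing them is the other common option. The column names, the accepted date formats in `DATE_FORMATS` and the sample rows are assumptions for illustration.

```python
from datetime import datetime

# Input date formats assumed for this sketch; extend to match your sources.
DATE_FORMATS = ("%d/%m/%Y", "%Y-%m-%d", "%B %d, %Y")


def to_number(value):
    """Strip currency symbols and thousands separators; None if not numeric."""
    if value is None:
        return None
    cleaned = str(value).replace(",", "").replace("$", "").strip()
    try:
        return float(cleaned)
    except ValueError:
        return None


def to_ddmmyyyy(value):
    """Normalize a date string to dd/mm/yyyy; None if no known format matches."""
    if not value:
        return None
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).strftime("%d/%m/%Y")
        except ValueError:
            continue
    return None


def clean_rows(rows):
    """Coerce each field to one data type, then drop rows with missing values."""
    cleaned = []
    for row in rows:
        fixed = {
            "product": (row.get("product") or "").strip() or None,
            "price": to_number(row.get("price")),
            "listed_on": to_ddmmyyyy(row.get("listed_on")),
        }
        if all(value is not None for value in fixed.values()):
            cleaned.append(fixed)
    return cleaned


raw = [
    {"product": "Laptop", "price": "$1,299.00", "listed_on": "2024-03-05"},
    {"product": "Phone", "price": "N/A", "listed_on": "05/03/2024"},
    {"product": "Tablet", "price": "349", "listed_on": "March 5, 2024"},
]
print(clean_rows(raw))
# [{'product': 'Laptop', 'price': 1299.0, 'listed_on': '05/03/2024'},
#  {'product': 'Tablet', 'price': 349.0, 'listed_on': '05/03/2024'}]
```

Note that the order of `DATE_FORMATS` matters: a string such as 03/05/2024 is accepted as dd/mm/yyyy first, so sources that publish mm/dd/yyyy need their own handling.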

* Data Fields Web Scraping

You will often have data fields that can take one of a fixed set of n values (for example, a gender column that can contain m, f, or o, or a veteran status column that holds a binary value such as True or False). These values need to be converted to numbers before you can use them. Binary values can be converted to 1 or 0, depending on what they represent. Where there are n options, you can use the integer codes 0 to n-1. Values that are large, or can become large, should be scaled down; for example, a field with values in millions of rupees can be expressed in millions so that it has only 1 to 3 digits. Many other operations can be performed on the data later on to help process it and create more efficient models.
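The three conversions above (binary to 1/0, n categories to codes 0 to n-1, and large amounts scaled down) can be sketched in a few lines of plain Python. The helper names and sample values below are hypothetical and only illustrate the idea.

```python
def encode_binary(value):
    """Map True/False-style values to 1/0."""
    return 1 if str(value).strip().lower() in ("true", "yes", "1") else 0


def build_category_map(values):
    """Assign each distinct category an integer code from 0 to n-1."""
    return {category: code for code, category in enumerate(sorted(set(values)))}


def scale_to_millions(amount):
    """Express a large amount (e.g. in rupees) in millions: 45,000,000 -> 45.0."""
    return amount / 1_000_000


gender = ["m", "f", "o", "f"]
gender_map = build_category_map(gender)          # {'f': 0, 'm': 1, 'o': 2}
gender_codes = [gender_map[g] for g in gender]   # [1, 0, 2, 0]

veteran = ["True", "False", "True"]
veteran_codes = [encode_binary(v) for v in veteran]  # [1, 0, 1]

income_rupees = [45_000_000, 2_500_000, 120_000_000]
income_millions = [scale_to_millions(x) for x in income_rupees]  # [45.0, 2.5, 120.0]
```

Keep the category map you built so that new data is encoded with the same codes. Also note that integer codes imply an order (here o > m > f) that some models, such as linear ones, may treat as meaningful; one-hot encoding, with one 0/1 column per category, is the usual alternative when the categories have no natural order.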

* Issues with data storage

Data storage can be a whole problem statement on its own, depending on the data format and on how frequently business systems access the data. You should pick the most cost-effective cloud storage service that fits the shape of your data. Broadly, scraped data comes in three forms: tabular data with almost the same number of data points in every row, data with irregular rows, and heavy files such as images. For the first, you can build a relational cloud database, for instance Amazon Aurora PostgreSQL. For data with a varying number of data points in each row, a NoSQL option is a better fit; a good choice is DynamoDB, a managed database provided by Amazon Web Services (AWS). For large quantities of heavy data, such as files and images that you may only need occasionally, a cheap object storage service such as Amazon S3 is very useful. Creating a folder in an S3 bucket and storing data in it works much like doing the same on your local machine.

Computer Libraries and Algorithms for Machine Learning

Python is one of the most popular programming languages used today for data science and machine learning. A number of third-party libraries are heavily used for building machine learning (ML) models. Scikit-Learn and TensorFlow are widely considered two of the most popular, letting you build models from large sets of tabular data with only a few lines of code. If you already know which algorithm you want to use, you can specify it in your code, for example a CNN, a random forest, or K-means. To implement an algorithm this way, you do not need inside knowledge of how the algorithm works or of the math behind it.

There is little evidence that traditional machine learning algorithms keep performing better as the amount of data rises exponentially; their performance tends to level off. This was not a problem in the past, because traditional datasets were rarely large enough for it to matter. However, web scraping is on the rise, along with the collection of big data from IoT devices, and this has played a very important role in the development of neural networks. When the dataset is very small, neural networks perform about as well as other algorithms; as the dataset grows, their performance benefits become more evident.

What Lies Ahead For Web Scraping?

There is an endless supply of information on the web, and it will remain a vital data source for testing machine learning algorithms. As data grows, we will need newer algorithms that can process it faster, because the models we already build every month require a lot of computing power. Hopefully, in the near future, artificial intelligence models will be able to benefit even more from larger datasets than they do now, which should allow them to make much better predictions. As a fully managed web scraping service provider, Crawlbase is able to address the big data needs of enterprises and start-ups alike. We would love to hear your feedback on this article. If you liked it, please leave us a comment in the section below.